ChatGPT has attracted a lot of attention, captivating tech enthusiasts and professionals alike. The OpenAI chatbot, powered by generative AI, answers questions in human-like, natural language. Its adoption has been remarkable: within just five days of its release, over a million users had logged into the platform, making its user base one of the fastest-growing of any platform.

The world is buzzing with excitement as businesses find uses for ChatGPT in countless domains. From marketers to educators and customer service agents to healthcare professionals, people from all walks of life have discovered valuable use cases for this AI technology. Even in the legal domain, ChatGPT’s language processing capabilities are used to analyze contracts, identify risks, streamline drafting, and compare software.

ChatGPT is impressive and provides informative answers most of the time. But let’s not forget: it can occasionally be wrong.

It can lack accuracy, up-to-date information, verified sources, and contextual understanding, which can lead to misleading and biased output. In the document and contract industry, relying solely on ChatGPT poses significant challenges.

Given these shortcomings, it’s important to treat ChatGPT’s responses with caution. At this stage, human involvement and expertise are needed to validate the content ChatGPT generates.

Let’s explore the specific limitations of generative AI when it comes to eSignatures and contract workflows. In the spirit of this article’s topic, we asked ChatGPT a couple of questions, and our analysis is shared below.

1. ChatGPT output is based on outdated information

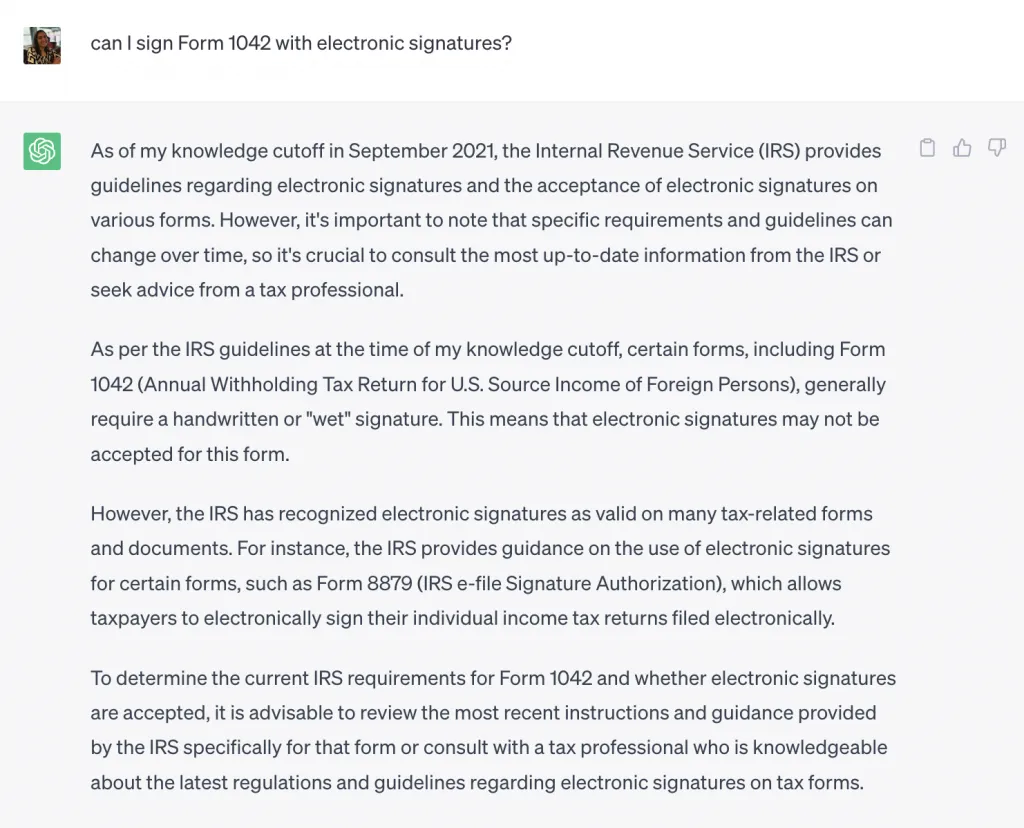

The large language models (LLMs) behind ChatGPT don’t have the most recent information or updates, because their training data only extends to September 2021. As a result, content that requires current knowledge can be inaccurate.

For instance, we asked ChatGPT whether a person could sign Form 1042 with an electronic signature. Because the IRS updated this information on December 8, 2021, after ChatGPT’s training cutoff, ChatGPT gave an inaccurate answer.

Depending solely on ChatGPT for recent developments may result in outdated and incorrect information. That’s why we must verify up-to-date information against reliable sources to ensure compliance and accuracy in contract workflows.

2. ChatGPT output is biased and misleading

The generative AI models used by ChatGPT rely heavily on their training data, much of which is sourced from publicly available pages on the Internet. From this data, the models learn statistical patterns in how words and concepts relate. When given a prompt, the model predicts the next words based on those patterns, generating output by selecting words or phrases that are likely given what it has learned. Because the training data can contain biases and inaccuracies, the output can reproduce them.
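To make this concrete, here is a toy sketch in Python. It uses a bigram model, a drastically simplified stand-in for an LLM and not how ChatGPT is actually implemented, that counts which word follows which in a small, hypothetical training text and always picks the most frequent continuation. The product name "vendor x" and the three training sentences are made up for illustration. Because the skewed corpus repeats one claim more often than the other, the model repeats the majority view, whether or not it is true.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus):
    """Count how often each word follows another in the training text."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def predict_next(counts, word):
    """Greedy prediction: return the most frequent follower of `word`, or None."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def generate(counts, start, max_words=5):
    """Extend `start` one predicted word at a time until no continuation exists."""
    words = [start]
    for _ in range(max_words):
        nxt = predict_next(counts, words[-1])
        if nxt is None:
            break
        words.append(nxt)
    return " ".join(words)


# Hypothetical, deliberately skewed training data: the (false) claim appears
# twice, the correct one only once.
corpus = [
    "vendor x lacks integrations",
    "vendor x lacks integrations",
    "vendor x offers integrations",
]

counts = train_bigrams(corpus)
print(generate(counts, "vendor"))  # prints "vendor x lacks integrations"
```

Real LLMs use neural networks over tokens rather than word counts, and they usually sample from a probability distribution instead of always taking the top choice, which is also one reason the same question can yield different answers.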

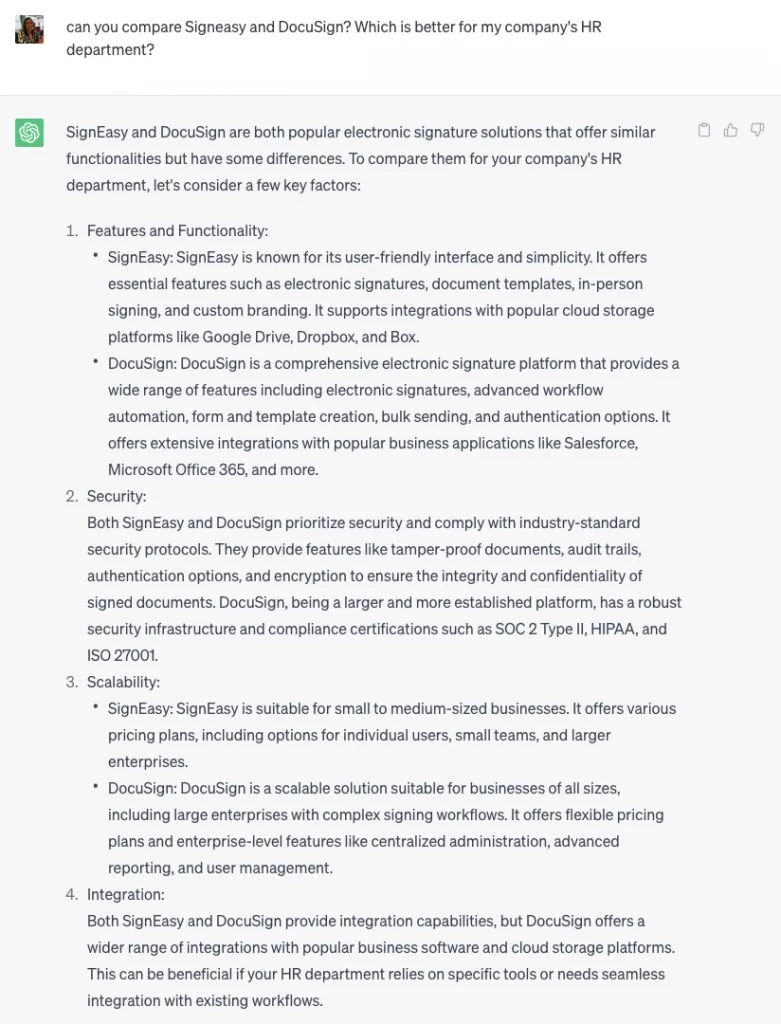

We asked ChatGPT to compare Signeasy with DocuSign and say which was the better option for an HR department. As you can see, the response is biased. A reader without other information would mistakenly conclude that Signeasy doesn’t integrate with Salesforce or Microsoft 365, or that it isn’t SOC 2 Type II and HIPAA compliant. In reality, Signeasy offers all of these, along with superior support.

The response is also inconsistent: ask the same question again, and ChatGPT’s answer may change. It is vital to be aware of these limitations and to validate the information against reliable sources before making decisions.

3. ChatGPT output lacks contextual understanding and is unverifiable

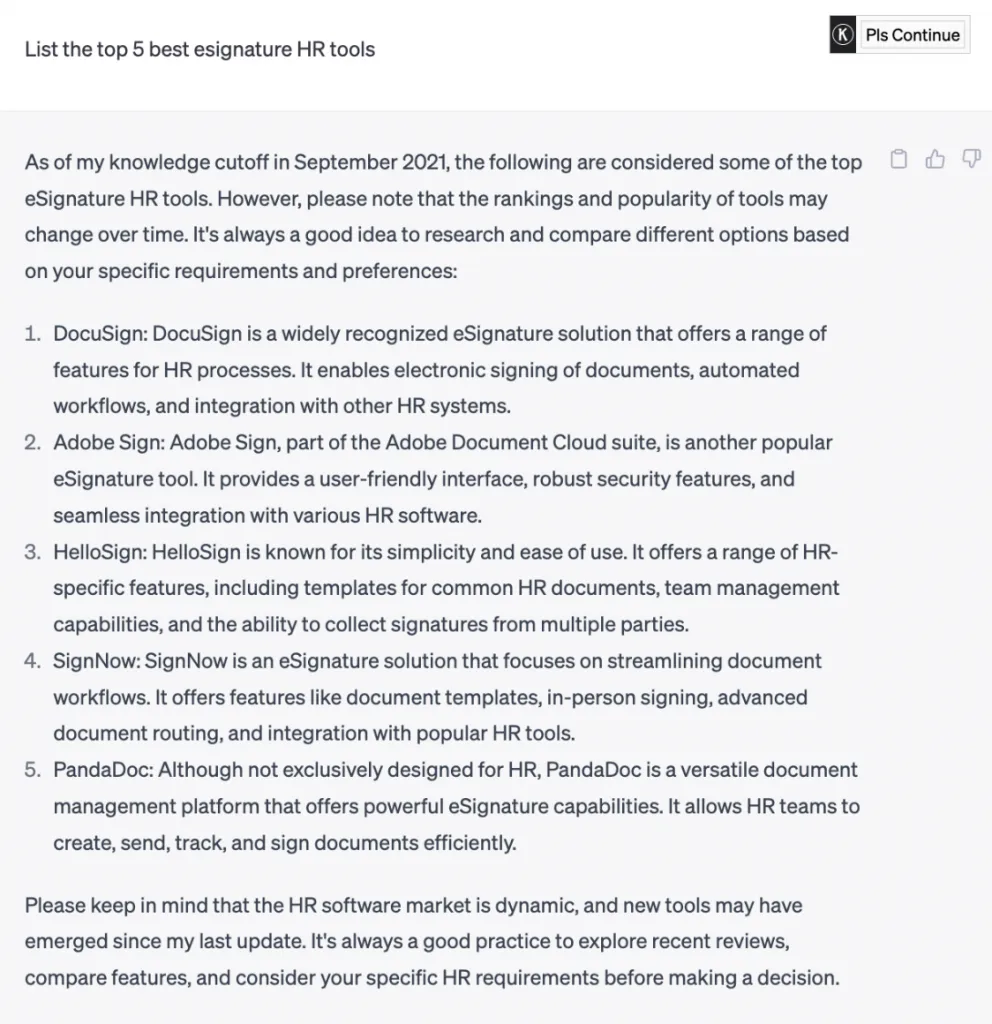

ChatGPT struggles with context and nuance, so its answers may not fit the specific question asked. When we asked it to list the top 5 eSignature tools for an HR department, it included PandaDoc, even though PandaDoc is not designed for HR use cases. This shows that the model struggles to grasp specific requirements and their legal implications. For a more reliable evaluation, you can check our PandaDoc vs DocuSign comparison based on verified features and real use cases.

ChatGPT’s generated output often lacks proper citations and references to reliable sources. In cases where it does provide a source, the link is frequently broken or invalid, rendering the information unreliable. This makes it challenging to verify the accuracy and credibility of the information presented by ChatGPT.

Note: Because such output is often wrong yet hard to verify, platforms like Stack Overflow have banned ChatGPT-generated answers.

What’s next: embracing generative AI with caution

Generative AI has great potential. Think automating routine contract drafting tasks, extracting clauses with greater efficiency, or even analyzing large volumes of legal documents for risk assessment. But it requires a cautious and responsible approach.

Because ChatGPT generates output by mimicking patterns in its training data, it can produce inaccuracies, misinterpretations, and output that is not suitable for the intended purpose. This limits its overall accuracy and reliability, highlighting the need for human expertise.

In the electronic signature and contract workflow industry, executives must carefully consider the practical and ethical implications of using generative AI tools like ChatGPT for tasks like contract drafting and analysis.

The US Federal Trade Commission (FTC) has said it will hold organizations accountable for using AI in ways that promote bias or inequity. The European Union has also proposed strict AI regulations, with significant fines for violations. These regulations underscore the growing importance of responsible AI practices and the potential consequences of non-compliance.

Moving forward, a collaborative approach that combines the strengths of humans and AI is crucial in the contract workflow industry. By pairing the capabilities of generative AI with human expertise, organizations can achieve better results while keeping output accurate, context-aware, and unbiased. This partnership between human intelligence and AI technology will drive innovation, enhance productivity, and enable the industry to navigate the evolving landscape of contract management effectively.